Natural language processing (NLP) models have evolved from simple statistical methods to deep learning architectures. Among these, T5 (Text-to-Text Transfer Transformer) stands out for a simple but consequential idea: treat every NLP task as converting one piece of text into another. This article examines T5's architecture, applications, advantages, and limitations, and what it suggests about the future of text processing.

1. Genesis of T5

Before T5, the NLP landscape was dominated by models such as BERT, GPT, and their variants. These models were groundbreaking, yet a challenge persisted. Each task typically needed its own output layer or task-specific setup, such as a classification head for sentiment analysis or a span-prediction head for question answering. There was no single model that could address many NLP tasks with one architecture, one training objective, and one way of producing outputs.

T5 was introduced by a team of researchers at Google in the 2019 paper "Exploring the Limits of Transfer Learning with a Unified Text-to-Text Transformer" (Raffel et al.). Seeing how fragmented existing approaches were, they asked a simple question: could one model, trained and used in one consistent way, handle the broad spectrum of text-based tasks? The question was less about combining existing capabilities than about rethinking how tasks are posed to a model in the first place.

The answer lay in the text-to-text framework. Instead of treating each NLP task as a unique problem requiring a bespoke solution, T5 casts every task as the same operation: the model receives text as input and produces text as output. Translation, summarization, question answering, and classification all take this form. Even a classification label or a numeric score is emitted as a string. Because every task shares one format, the same model, training objective, and decoding procedure can be reused across all of them.
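To make the idea concrete, here is a minimal sketch of casting examples from different tasks into (input, target) string pairs. The task prefixes follow the ones shown in the T5 paper. The helper names `to_text_to_text` and `stsb_target` are hypothetical, and the CoLA label words are illustrative. For STS-B regression, the paper rounds the similarity score to the nearest 0.2 and emits it as text, and the sketch does the same.

```python
# Hypothetical helpers that cast examples from different tasks into
# (input_text, target_text) pairs. The prefixes follow those shown in the
# T5 paper; the CoLA label words are illustrative.

def stsb_target(score: float) -> str:
    """Regression as text: round a 0-5 similarity score to the nearest 0.2."""
    return f"{round(score * 5) / 5:.1f}"


def to_text_to_text(task: str, **fields) -> tuple[str, str]:
    """Return an (input_text, target_text) pair for one example."""
    if task == "translate_en_de":
        return f"translate English to German: {fields['source']}", fields["target"]
    if task == "summarize":
        return f"summarize: {fields['document']}", fields["summary"]
    if task == "cola":
        # Classification: the target is a label word, not a class index.
        label = "acceptable" if fields["label"] == 1 else "unacceptable"
        return f"cola sentence: {fields['sentence']}", label
    if task == "stsb":
        source = f"stsb sentence1: {fields['s1']} sentence2: {fields['s2']}"
        return source, stsb_target(fields["score"])
    if task == "squad":
        source = f"question: {fields['question']} context: {fields['context']}"
        return source, fields["answer"]
    raise ValueError(f"unknown task: {task}")


print(to_text_to_text("translate_en_de", source="That is good.", target="Das ist gut."))
print(to_text_to_text("stsb", s1="The rhino grazed on the grass.",
                      s2="A rhino is grazing in a field.", score=3.8))
```

Whatever the task, every pair has the same shape, so all of them can be fed to one model and trained with one loss.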

2. Architectural Insights

T5 Transformer Structure

T5 builds on the Transformer architecture (Vaswani et al., 2017), which reshaped deep learning for NLP. Central to the Transformer is the attention mechanism. When computing each position's representation in a sequence, attention lets that position weigh every other position, which captures long-range patterns and relationships in the text. T5 uses the full encoder-decoder form of the Transformer. The encoder reads the input text, and the decoder generates the output text one token at a time while attending to the encoder's output.

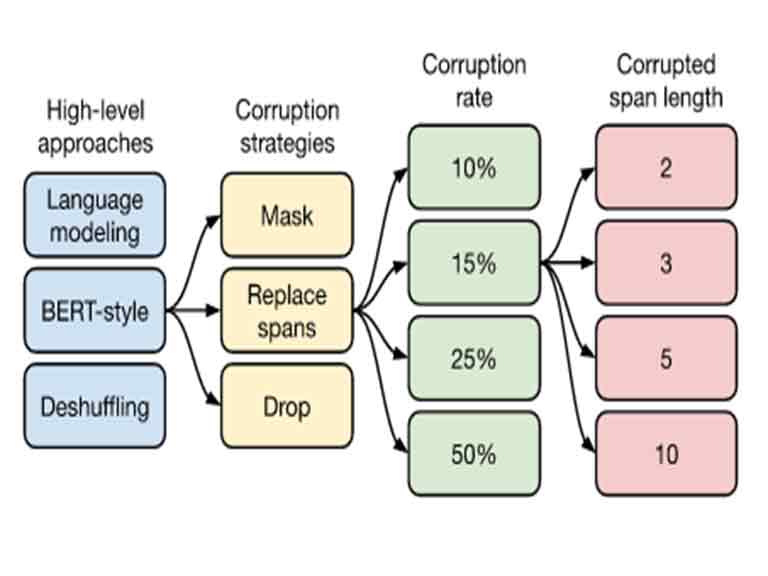

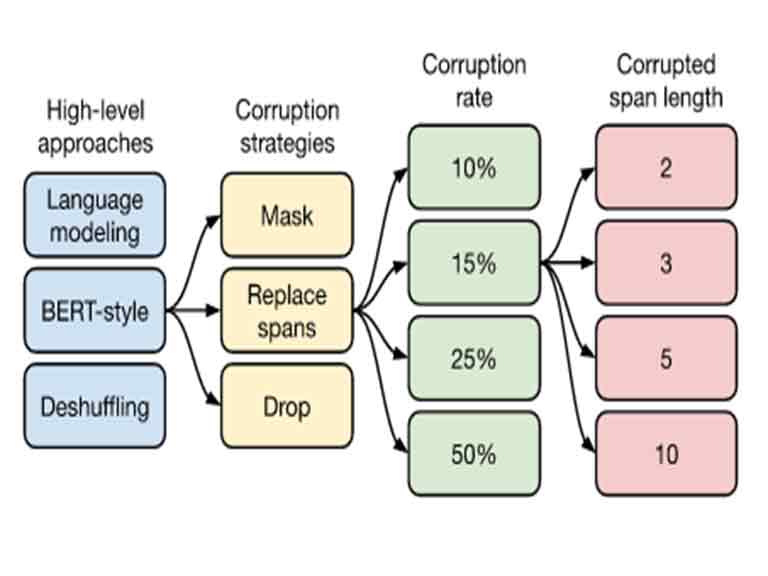
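The attention mechanism described above can be sketched in a few lines of plain Python. This is the generic scaled dot-product attention from the original Transformer paper, softmax(QK^T / sqrt(d_k))V, written with lists instead of tensors for readability. It is not T5's own implementation, which differs in some details. For example, T5 adds learned relative position biases to the attention scores.

```python
import math


def softmax(scores: list[float]) -> list[float]:
    """Numerically stable softmax: subtract the max before exponentiating."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d_k)) V.

    queries, keys, values are lists of equal-length vectors (lists of floats);
    keys and values must have the same number of rows.
    Returns (outputs, weights), one row per query.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Similarity of this query to every key, scaled by sqrt(d_k).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        # The output is the attention-weighted average of the value vectors.
        out = [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        outputs.append(out)
        weights.append(w)
    return outputs, weights


# A query that matches the first key attends almost entirely to it.
outs, ws = attention(queries=[[4.0, 0.0]], keys=[[4.0, 0.0], [0.0, 4.0]],
                     values=[[1.0, 0.0], [0.0, 1.0]])
print([round(x, 3) for x in ws[0]])
```

In a real model, Q, K, and V are learned linear projections of the token representations, and several such attention "heads" run in parallel.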

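T5's architecture, however, is deliberately close to the original Transformer. Its authors changed only minor details, such as using a simplified layer normalization and replacing the fixed sinusoidal position embeddings with learned relative position biases. The real departure lies in how the model is trained and used. BERT-style models are typically fine-tuned for each task separately, with a task-specific output layer. T5 is first pre-trained with a denoising objective on C4 (the Colossal Clean Crawled Corpus), a large cleaned web-text corpus. In this objective, random spans of text are masked out and the model learns to reconstruct them. Every downstream task is then expressed in the same text-to-text format. The authors also studied training on a mixture of many tasks at once. Because all tasks share one format, mixing them is simply a matter of mixing their text examples.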

This text-to-text formulation simplifies the learning setup considerably. Every task uses the same model and the same maximum-likelihood objective (cross-entropy over the target tokens), whether it is translating languages or answering questions. As a result, what the model learns on one task can transfer to others without any architectural change. A single set of weights can serve translation, summarization, and question answering, and different training strategies can be compared across tasks on an equal footing.
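Because every task is text-to-text, training on many tasks at once comes down to deciding how often to sample from each task's data. The T5 paper compares several mixing strategies. Two of them are sketched below.

- **Examples-proportional mixing:** each task is sampled in proportion to its dataset size, capped at a limit K so that one huge dataset cannot dominate.
- **Temperature-scaled mixing:** the rates are flattened toward uniform.

The function names, dataset sizes, and the limit value below are hypothetical and chosen for illustration.

```python
import random


def examples_proportional_rates(sizes: dict[str, int], limit: int) -> dict[str, float]:
    """Rate for task m: min(e_m, K) / sum_n min(e_n, K), with K = limit.

    The cap K keeps a huge dataset from drowning out small ones.
    """
    capped = {task: min(n, limit) for task, n in sizes.items()}
    total = sum(capped.values())
    return {task: c / total for task, c in capped.items()}


def temperature_scaled_rates(rates: dict[str, float], temperature: float) -> dict[str, float]:
    """Raise each rate to 1/T and renormalize; T > 1 moves toward uniform."""
    scaled = {task: r ** (1.0 / temperature) for task, r in rates.items()}
    total = sum(scaled.values())
    return {task: s / total for task, s in scaled.items()}


def sample_tasks(rates: dict[str, float], n: int, seed: int = 0) -> list[str]:
    """Draw the task for each of n training examples according to the rates."""
    rng = random.Random(seed)
    tasks = list(rates)
    return rng.choices(tasks, weights=[rates[t] for t in tasks], k=n)


# Hypothetical dataset sizes, for illustration only.
sizes = {"translation": 4_000_000, "summarization": 290_000, "cola": 8_500}
rates = examples_proportional_rates(sizes, limit=2**19)
print(rates)
print(temperature_scaled_rates(rates, temperature=2.0))
print(sample_tasks(rates, n=10))
```

Raising the temperature gives small tasks such as CoLA more weight in the mixture, at the cost of sampling the large tasks less often.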

Shared Vocabulary

Alongside its architecture, T5 relies on a single shared vocabulary. Earlier systems often used separate vocabularies for the source and target languages in translation. Classification models typically predicted class indices from a task-specific output layer rather than producing words. Each such component is one more piece to build and maintain for every task.

T5 instead uses one SentencePiece vocabulary of about 32,000 subword units for both input and output. The vocabulary was built mostly from English text, with some German, French, and Romanian so that translation targets are covered too. Class labels, numeric scores, and translations are all spelled out with the same subword tokens. As a result, no task needs its own tokenizer or output layer, and the encoder and decoder share a single embedding matrix.

The shared vocabulary also supports T5's versatility. Moving between tasks requires no change to the tokenizer or the output layer, only a different prefix and a different target text. Because input and output live in one consistent representation, the single-model design is practical.
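The toy tokenizer below shows what "one vocabulary for input and output" means in practice. A task prefix, an English source sentence, its German translation, and a label word all map into the same id space. This is a minimal sketch under simplifying assumptions:

- The vocabulary is tiny and hypothetical.
- Greedy longest-match segmentation stands in for SentencePiece, which uses a different algorithm.
- Only the special-token ids (pad 0, end-of-sequence 1, unknown 2) mirror T5's convention.

```python
import re

# Hypothetical toy vocabulary; real T5 uses a ~32,000-piece SentencePiece model.
VOCAB = ["<pad>", "</s>", "<unk>", "translate", "English", "to", "German", ":",
         "That", "is", "good", ".", "Das", "ist", "gut", "un", "accept", "able"]
TOKEN_TO_ID = {tok: i for i, tok in enumerate(VOCAB)}


def encode(text: str) -> list[int]:
    """Split into words/punctuation, then greedily match the longest known piece."""
    ids = []
    for word in re.findall(r"\w+|[^\w\s]", text):
        start = 0
        while start < len(word):
            for end in range(len(word), start, -1):
                piece = word[start:end]
                if piece in TOKEN_TO_ID:
                    ids.append(TOKEN_TO_ID[piece])
                    start = end
                    break
            else:
                # No piece matches: emit <unk> for the rest of this word.
                ids.append(TOKEN_TO_ID["<unk>"])
                break
    ids.append(TOKEN_TO_ID["</s>"])  # end-of-sequence marker
    return ids


def decode(ids: list[int]) -> list[str]:
    return [VOCAB[i] for i in ids]


source_ids = encode("translate English to German: That is good.")
target_ids = encode("Das ist gut.")   # the decoder's target uses the same vocabulary
label_ids = encode("unacceptable")    # a label word split into subword pieces
print(source_ids, target_ids, decode(label_ids))
```

Because `.` maps to the same id on both sides, the encoder input and the decoder output genuinely share one embedding table rather than two parallel ones.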

3. Text-to-Text Paradigm

At the heart of T5's capabilities is its text-to-text paradigm, which simplifies how a model is told what to do. Instead of task-specific input formats or output layers, T5 receives plain text that begins with a short task prefix. A translation request looks like "translate English to German: [English text]", with the language pair named because the model must know which target language to produce. A summarization request looks like "summarize: [long article]". The prefix tells the model which task to perform, and the rest of the input is the text to operate on.

Given this input, T5 generates output text appropriate to the task: the German sentence, the summary, or, for a classification task, the label word itself. This standardized mapping from input text to output text is the foundation of T5's versatility.
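Because the output is free text, a model can in principle generate a string that is not a valid label. The T5 paper notes that such an output, for example "hamburger" for a positive/negative sentiment task, would be counted as wrong. A minimal sketch of that evaluation step follows. The function names are hypothetical.

```python
from typing import Optional


def parse_label(output: str, labels: list[str]) -> Optional[str]:
    """Map generated text to a valid label, or None if it matches none."""
    text = output.strip()
    return text if text in labels else None


def parse_score(output: str) -> Optional[float]:
    """Read a regression target (e.g. STS-B) back from text; None if malformed."""
    try:
        return float(output.strip())
    except ValueError:
        return None


def accuracy(outputs: list[str], gold: list[str], labels: list[str]) -> float:
    """Invalid outputs (parse_label -> None) never equal a gold label, so they count as wrong."""
    correct = sum(parse_label(o, labels) == g for o, g in zip(outputs, gold))
    return correct / len(gold)


print(accuracy(["positive", "hamburger", "negative"],
               ["positive", "negative", "negative"],
               labels=["positive", "negative"]))
```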

4. Applications and Versatility

T5's text-to-text approach supports a wide range of applications:

Translation: In an interconnected world, language barriers hinder communication. T5 handles translation by prefixing the source sentence with an instruction such as "translate English to German:". In the original paper, it was trained and evaluated on WMT English-to-German, English-to-French, and English-to-Romanian translation, and its vocabulary was built to cover those languages. Its results were competitive. However, the authors note that they did not surpass the best specialized systems of the time, which used techniques such as back-translation. Language pairs outside these three are beyond the original model's training, and broader coverage requires a multilingual variant such as mT5.

Summarization: Condensing long content into concise summaries is valuable in many fields. Given the prefix "summarize:", T5 generates abstractive summaries, and the paper evaluated it on the CNN/Daily Mail news summarization benchmark. It works best on inputs within the length it was trained on (512 tokens). Very long documents, such as full research papers or reports, therefore usually need to be truncated or split into chunks. Within these limits, automatic summarization can speed up reading and support faster, better-informed decisions.

Question Answering: Retrieving precise answers from large bodies of text is central to modern information access. For reading-comprehension tasks such as SQuAD, T5 receives the question and a supporting passage in one input (for example, "question: ... context: ...") and generates the answer as text. Extractive models, by contrast, point to a span of the passage. The same format extends to other question types, such as the yes/no questions in the SuperGLUE benchmark.

Conversational Agents: T5 was not designed specifically as a dialogue system, but a conversation can be framed as text-to-text: the dialogue history is the input and the next response is the target. After fine-tuning on conversational data, T5 can generate fluent, context-aware replies to questions, requests for recommendations, or casual chat. This makes it a reasonable building block for chatbots and assistants, although the quality of its responses depends heavily on the fine-tuning data.

5. Advantages Over Predecessors

Unified Framework

A defining feature of T5 is its unified text-to-text paradigm, a departure from the task-specific designs of its predecessors. Earlier models were typically adapted to each task with a custom output layer and input format. This fragmented development across tasks and made it harder to scale a single system to new ones.

T5 removes these barriers by expressing every task in one format, so adding a task means preparing new text pairs rather than designing a new architecture. This simplifies development and makes results easier to compare. Because the model, objective, and training procedure stay fixed, researchers can study the effect of one change at a time. The T5 authors did exactly this in their systematic comparison of pre-training objectives, datasets, and transfer strategies.

Generalization

T5 also generalizes well across a diverse range of NLP tasks. Because it is pre-trained on a large, varied corpus and every task shares the same format, what it learns for one task can carry over to others. When it was released, the largest T5 model (11 billion parameters) achieved state-of-the-art results on many benchmarks covering summarization, question answering, and text classification, including GLUE and SuperGLUE.

This broad training gives T5 a strong general command of language. It performs well on translation, summarization, question answering, and other tasks without task-specific architectural changes. Its accuracy still depends on model size and on the data used for fine-tuning, but its breadth makes it applicable to many problems.

Efficiency

Efficiency often determines whether a model is practical in real-world applications. One notable aspect of T5's design in this respect is its shared vocabulary: a single tokenizer and a single set of token embeddings serve every task.

Sharing one vocabulary, and tying the embedding matrix across the encoder input, the decoder input, and the output layer, reduces the parameter count. It also keeps the processing pipeline simple: one tokenizer and one model checkpoint can serve many tasks, instead of one specialized model per task.
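A short calculation shows the size of the embedding saving. The sketch assumes T5-Base's hidden size (768) and a 32,000-entry vocabulary. It compares one tied embedding matrix against a hypothetical untied design with three separate matrices (encoder input, decoder input, output projection). The helper name is illustrative.

```python
def embedding_params(vocab_size: int, d_model: int, tied: bool) -> int:
    """Parameters in the token-embedding and output-projection matrices.

    Untied: separate encoder-input, decoder-input and output matrices (3 total).
    Tied: a single vocab_size x d_model matrix shared by all three roles.
    """
    matrices = 1 if tied else 3
    return matrices * vocab_size * d_model


VOCAB_SIZE = 32_000  # size of T5's SentencePiece vocabulary per the paper
D_MODEL = 768        # hidden size of T5-Base

tied = embedding_params(VOCAB_SIZE, D_MODEL, tied=True)
untied = embedding_params(VOCAB_SIZE, D_MODEL, tied=False)
print(f"tied: {tied:,}  untied: {untied:,}  saved: {untied - tied:,}")
```

Under these assumptions, tying saves 49,152,000 parameters, a large share of a roughly 220-million-parameter model. For the largest T5 models, the same saving is a small fraction of the total.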

These savings do not make T5 lightweight, however. The main practical benefit is operational: one deployed checkpoint can handle several tasks, which simplifies integration. Raw inference speed still depends on model size, and the larger T5 variants are costly to serve in latency-sensitive, real-time applications.

6. Challenges and Limitations

T5 has reshaped what is achievable in NLP applications, but like any technology it has challenges and limitations. This section covers three of them: computational cost, the need for fine-tuning, and interpretability.

Complexity

T5's capabilities come at a computational cost. The released models range from about 60 million parameters (T5-Small) through 220 million (Base), 770 million (Large), and 3 billion, up to 11 billion. The larger variants need substantial resources to train, fine-tune, and serve, including high-memory accelerators such as GPUs or TPUs and specialized infrastructure.

For organizations and developers with limited resources, deploying the larger T5 models can be difficult. Hardware and operating costs can be prohibitive, which limits accessibility and scalability. This constraint motivates optimizations and smaller, resource-efficient variants, so that T5's capabilities are available in more settings.
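A rough estimate makes these memory demands concrete. The sketch multiplies the approximate parameter counts reported for the original T5 checkpoints by the bytes per parameter for common numeric formats. It counts the weights alone. Activations, framework overhead, and (for training) gradients and optimizer state add substantially more. The dictionary keys and helper name are illustrative.

```python
# Approximate parameter counts of the original T5 checkpoints.
T5_SIZES = {
    "t5-small": 60e6,
    "t5-base": 220e6,
    "t5-large": 770e6,
    "t5-3b": 3e9,
    "t5-11b": 11e9,
}
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "int8": 1}


def weight_memory_gb(n_params: float, dtype: str) -> float:
    """Memory for the weights alone, in gigabytes (10^9 bytes)."""
    return n_params * BYTES_PER_PARAM[dtype] / 1e9


for name, n in T5_SIZES.items():
    print(f"{name:>9}: {weight_memory_gb(n, 'float32'):6.2f} GB fp32, "
          f"{weight_memory_gb(n, 'bfloat16'):6.2f} GB bf16")
```

Even in bfloat16, the 11-billion-parameter model needs about 22 GB just to hold its weights, which exceeds the memory of many single GPUs.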

Fine-Tuning

T5's universal text-to-text framework promises versatility across many NLP tasks, but optimal performance on a given task is not automatic. Pre-training gives T5 broad linguistic competence, yet fine-tuning on task-specific data is usually still needed for the best results.

Fine-tuning introduces complexities of its own. It requires careful dataset curation, domain-specific tuning, and iterative experimentation, all of which demand expertise, time, and computational resources. For organizations and researchers, this means longer development cycles, higher computational costs, and a steep learning curve. Moreover, results depend on the quality, diversity, and representativeness of the fine-tuning data, which makes performance less predictable.
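Part of the practical work in fine-tuning is preparing training batches. The sketch below assumes the targets are already tokenized. It follows the convention used by common T5 implementations such as Hugging Face Transformers:

- Labels are padded with -100, PyTorch's default `ignore_index` for cross-entropy loss, so padding contributes no loss.
- Decoder inputs are the targets shifted right by one position, starting with the pad id (0). This setup is known as teacher forcing.

The function name and token ids are hypothetical.

```python
PAD_ID = 0             # T5's padding id; also used as the decoder start token
IGNORE_INDEX = -100    # default ignore_index of PyTorch's CrossEntropyLoss


def prepare_targets(batch: list[list[int]], max_len: int) -> tuple[list[list[int]], list[list[int]]]:
    """Build (labels, decoder_input_ids) for teacher-forced training.

    labels: target ids padded with IGNORE_INDEX so padding adds no loss.
    decoder_input_ids: targets shifted right by one, starting with PAD_ID,
    so position t sees the gold tokens before t and predicts token t.
    Targets longer than max_len are truncated (which may drop the EOS id).
    """
    labels, decoder_inputs = [], []
    for ids in batch:
        ids = ids[:max_len]
        n_pad = max_len - len(ids)
        labels.append(ids + [IGNORE_INDEX] * n_pad)
        padded = ids + [PAD_ID] * n_pad
        decoder_inputs.append([PAD_ID] + padded[:-1])
    return labels, decoder_inputs


# Hypothetical token ids; 1 is T5's end-of-sequence id.
labels, dec_in = prepare_targets([[5, 6, 1], [7, 1]], max_len=4)
print(labels)
print(dec_in)
```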

Interpretability

As automated systems increasingly inform decisions, the interpretability of models like T5 becomes critically important. The same scale and complexity that make T5 effective also obscure how it arrives at its outputs, so in practice it behaves as a black box. This opacity raises serious concerns in critical applications where transparency, accountability, and trust are essential.

Interpreting T5's decisions is difficult for several reasons. With up to billions of parameters, many attention heads, and complex interactions between layers, its behavior is hard to explain with traditional techniques such as inspecting feature weights. Attention weights can be visualized, but they do not necessarily explain why the model produced a given output. As a result, stakeholders face uncertainty about when the model can be relied on, which undermines informed decision-making and trust.

Addressing interpretability requires concerted effort across the NLP community. It calls for new frameworks, tools, and methods suited to large models like T5. By shedding light on how T5 reaches its outputs, researchers can improve transparency and accountability and build the trust needed for responsible deployment.

7. Future Implications and Innovations

T5's success has shifted benchmarks and opened new lines of research in NLP. This section looks at three directions that will shape its future: more efficient architectures, multimodal integration, and ethical considerations.

Efficient Architectures

T5's scale gives it strong performance but also brings substantial computational demands. Researchers are therefore developing more efficient variants and deployment techniques. These range from algorithmic refinements such as distillation and quantization, through architectural optimizations, to hardware-accelerated implementations.

The goal is twofold: to reduce the computational cost of deploying T5, and to make it practical in more settings. By balancing performance against efficiency, such variants aim to make T5's capabilities accessible to a wider range of users and organizations.
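Quantization is one of the techniques in this space. Below is a minimal sketch of symmetric per-tensor int8 quantization, applied to a plain list of hypothetical weights rather than to an actual T5 checkpoint. Storing each weight in one byte instead of four cuts weight memory by roughly 4x. The cost is a rounding error of at most half the scale per weight. Production toolkits use more refined schemes, such as per-channel scales and outlier handling, which this sketch omits.

```python
def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric per-tensor int8 quantization.

    scale = max|w| / 127 and q = round(w / scale), clipped to [-127, 127],
    so each weight is stored in one byte plus one shared float scale.
    """
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Approximate reconstruction of the original weights."""
    return [v * scale for v in q]


weights = [0.82, -1.27, 0.03, 0.5, -0.41]   # hypothetical weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_err = max(abs(w - r) for w, r in zip(weights, restored))
print(q, f"scale={scale:.5f}", f"max error={max_err:.5f}")
```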

Multimodal Integration

Integrating modalities such as text, images, and audio is an active frontier in AI research, promising capabilities beyond what any single modality offers. Given its strength in text processing, a T5-style model could serve as the language component of such a system, working alongside image and audio modules.

Multimodal systems of this kind could perceive and interact with the world through several senses at once. Potential applications range from virtual environments and interactive media to assistive technologies and autonomous systems.

Ethical Considerations

As T5 and similar models spread across domains and applications, ethical questions become more pressing. Foremost among them is bias. T5 learns from a vast web-crawled corpus, so it can absorb and reproduce biases present in that data. Addressing bias requires careful scrutiny of training data, greater transparency about how models are built, and accountability frameworks, so that T5's outputs are as fair and representative as possible.

Beyond bias, ethical concerns include data privacy, algorithmic accountability, and broader societal effects. As models like T5 enter critical sectors such as healthcare, finance, and government, handling these issues with care and foresight is essential to prevent misuse, exploitation, and unintended harm.

T5 has had a transformative effect on NLP, and its influence is likely to continue. As researchers, developers, and other stakeholders build on it, a shared commitment to innovation, responsibility, and ethical stewardship will help ensure that the resulting progress is inclusive and equitable.

Asher Molina